
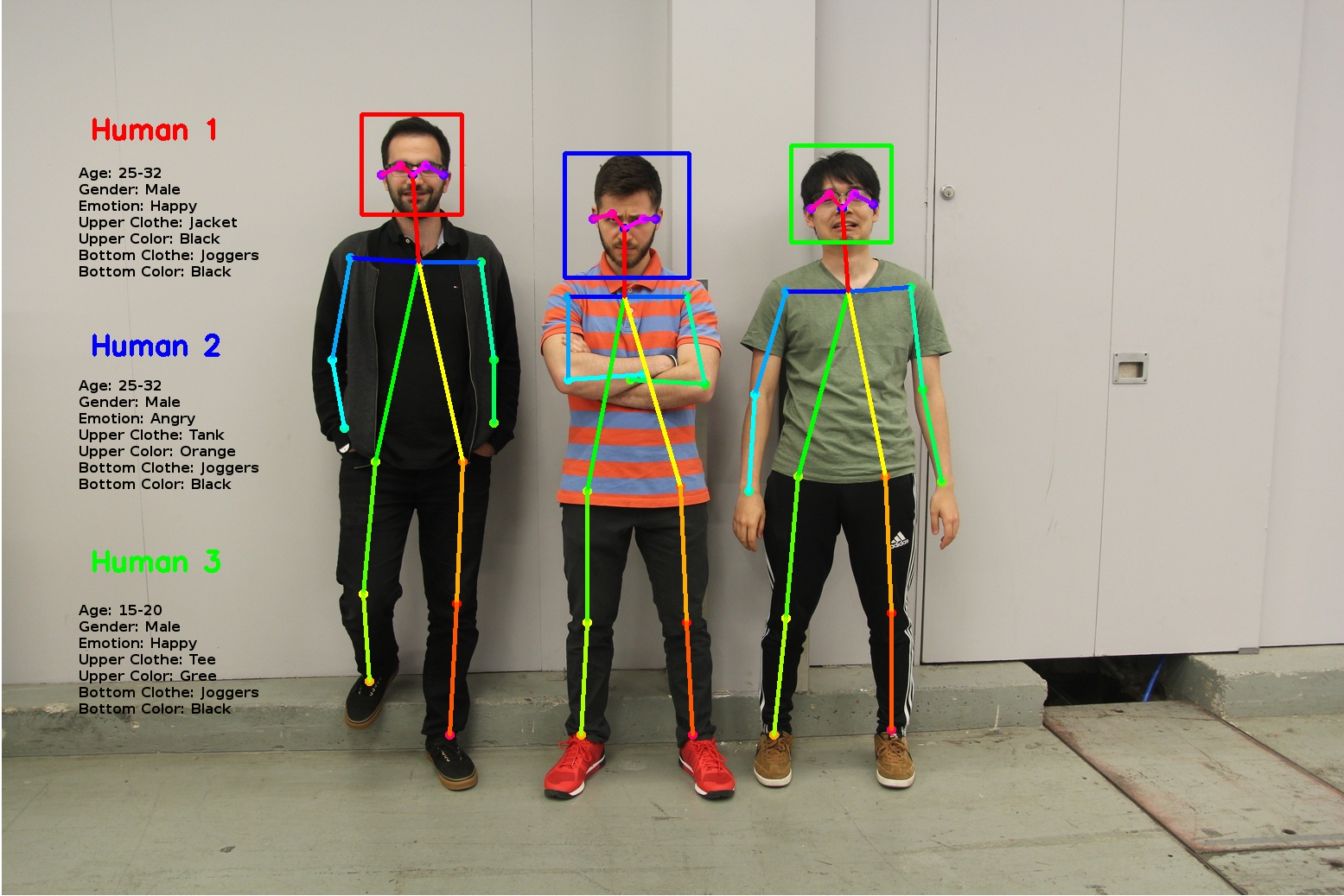

Pose Estimation and Person Description Using Convolutional Neural Networks

OpenPose detects each person and their body parts in the camera frame, and crops of those parts are then passed to several deep convolutional classifiers.

OpenPose for Person Detection and Segmentation

OpenPose was used to find the keypoints (e.g. right elbow, left knee) of each person in the camera frame, so that each person could be segmented into head, torso, and legs. Three convolutional classifiers then inferred each person's gender, age, and emotion from their face. Using the DeepFashion dataset from the Chinese University of Hong Kong, I trained an InceptionV3 model to recognize the clothes in an image. By feeding this model crops of each person's torso and legs, their upper and lower clothes could be inferred. Finally, a color histogram computed over pixels near particular keypoints was used to infer the color of each garment.
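The color step can be sketched as follows. This is a minimal illustration, assuming the image is a plain list of RGB rows and that the relevant keypoints (for example shoulders and hips for an upper garment) have already been selected. The window radius, the 8-level quantization per channel, and the named `PALETTE` are hypothetical choices, not the project's actual settings.

```python
from collections import Counter
from typing import Dict, List, Sequence, Tuple

RGB = Tuple[int, int, int]
Image = List[List[RGB]]  # image[y][x] -> (r, g, b), each channel 0..255

# Hypothetical reference palette; the project's real color names are unknown.
PALETTE: Dict[str, RGB] = {
    "black": (0, 0, 0),
    "white": (255, 255, 255),
    "gray": (128, 128, 128),
    "red": (200, 30, 30),
    "green": (30, 160, 60),
    "blue": (30, 60, 200),
    "yellow": (230, 210, 40),
}


def pixels_near(image: Image, points: Sequence[Tuple[float, float]], radius: int) -> List[RGB]:
    """Collect pixels in a square window of half-width `radius` around each keypoint."""
    height, width = len(image), len(image[0])
    seen = set()
    out: List[RGB] = []
    for px, py in points:
        cx, cy = int(round(px)), int(round(py))
        for y in range(max(0, cy - radius), min(height, cy + radius + 1)):
            for x in range(max(0, cx - radius), min(width, cx + radius + 1)):
                if (x, y) not in seen:  # overlapping windows count each pixel once
                    seen.add((x, y))
                    out.append(image[y][x])
    return out


def dominant_color(pixels: Sequence[RGB], bins: int = 8) -> RGB:
    """Quantize each channel into `bins` levels and return the center of the fullest bin."""
    if not pixels:
        raise ValueError("no pixels sampled near the keypoints")
    step = 256 // bins
    hist = Counter((r // step, g // step, b // step) for r, g, b in pixels)
    (qr, qg, qb), _ = hist.most_common(1)[0]
    half = step // 2
    return (qr * step + half, qg * step + half, qb * step + half)


def nearest_name(color: RGB) -> str:
    """Map an RGB value to the closest palette entry by squared Euclidean distance."""
    return min(PALETTE, key=lambda n: sum((a - b) ** 2 for a, b in zip(color, PALETTE[n])))


def clothing_color(image: Image, keypoints: Sequence[Tuple[float, float]], radius: int = 3) -> str:
    """Name the dominant color in the neighborhood of the given keypoints."""
    return nearest_name(dominant_color(pixels_near(image, keypoints, radius)))


if __name__ == "__main__":
    # Synthetic 20x20 frame: blue "shirt" on top, near-black "pants" below.
    img = [[(30, 60, 200)] * 20 for _ in range(10)] + [[(10, 10, 10)] * 20 for _ in range(10)]
    print(clothing_color(img, [(5, 3), (14, 3)]))    # upper-garment keypoints
    print(clothing_color(img, [(5, 15), (14, 15)]))  # lower-garment keypoints
```

Sampling only near keypoints, rather than over the whole crop, is what keeps background pixels from dominating the histogram; a real pipeline would likely work in a perceptual color space such as HSV instead of raw RGB.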

DeepFashion Classifier

I trained both an InceptionV3 classifier and a YOLOv2 detector on the DeepFashion dataset. Ultimately, the InceptionV3 classifier was used; it was fed the upper and lower halves of each person detected by OpenPose.
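The upper and lower crops can be derived from the OpenPose keypoints roughly as below. This sketch assumes OpenPose-style part names (`Neck`, `RShoulder`, `LHip`, and so on) stored in a dict of `(x, y, confidence)` tuples; the grouping in `REGIONS`, the padding factor, and the confidence threshold are hypothetical, not the project's code. The `head` region illustrates where a face crop for the gender, age, and emotion classifiers could come from.

```python
from typing import Dict, List, Optional, Sequence, Tuple

Keypoint = Tuple[float, float, float]  # (x, y, confidence)
Box = Tuple[int, int, int, int]        # (x0, y0, x1, y1), end-exclusive

# Hypothetical grouping of OpenPose-style part names into body regions.
REGIONS: Dict[str, Sequence[str]] = {
    "head": ("Nose", "REye", "LEye", "REar", "LEar", "Neck"),
    "upper": ("Neck", "RShoulder", "LShoulder", "RElbow", "LElbow", "RHip", "LHip"),
    "lower": ("RHip", "LHip", "RKnee", "LKnee", "RAnkle", "LAnkle"),
}


def region_box(keypoints: Dict[str, Keypoint], region: str, width: int, height: int,
               pad: float = 0.1, min_conf: float = 0.1) -> Optional[Box]:
    """Bounding box of the confident keypoints in `region`, padded and clamped to the image."""
    pts = []
    for name in REGIONS[region]:
        kp = keypoints.get(name)
        if kp is not None and kp[2] >= min_conf:  # undetected parts have low/zero confidence
            pts.append((kp[0], kp[1]))
    if len(pts) < 2:
        return None  # too few parts to define a box
    xs, ys = [p[0] for p in pts], [p[1] for p in pts]
    pad_x = pad * (max(xs) - min(xs))
    pad_y = pad * (max(ys) - min(ys))
    x0 = max(0, int(min(xs) - pad_x))
    y0 = max(0, int(min(ys) - pad_y))
    x1 = min(width, int(max(xs) + pad_x) + 1)
    y1 = min(height, int(max(ys) + pad_y) + 1)
    if x1 <= x0 or y1 <= y0:
        return None
    return (x0, y0, x1, y1)


def crop(image: List[list], box: Box) -> List[list]:
    """Slice an image stored as image[y][x] to the given box."""
    x0, y0, x1, y1 = box
    return [row[x0:x1] for row in image[y0:y1]]


if __name__ == "__main__":
    person = {
        "Neck": (50, 40, 0.9), "RShoulder": (35, 42, 0.9), "LShoulder": (65, 42, 0.9),
        "RHip": (40, 100, 0.8), "LHip": (60, 100, 0.8),
        "RKnee": (40, 140, 0.7), "LKnee": (60, 140, 0.7),
        "RAnkle": (40, 180, 0.6), "LAnkle": (0, 0, 0.0),  # left ankle not detected
    }
    print(region_box(person, "upper", 100, 200, pad=0.0))  # torso crop for the clothes classifier
    print(region_box(person, "lower", 100, 200, pad=0.0))  # leg crop for the clothes classifier
```

Splitting at the hip keypoints rather than at the geometric middle of the person's bounding box keeps the torso and leg crops aligned with the body even when the person is sitting or partly occluded.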

Demonstration of person description using the Toyota HSR (Human Support Robot).