
Translating Verbal Commands into a Sequential List of Tasks for Robots

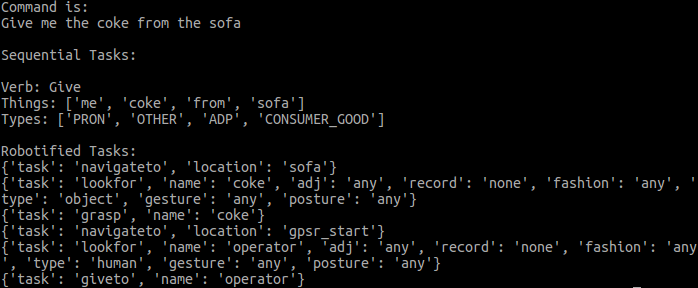

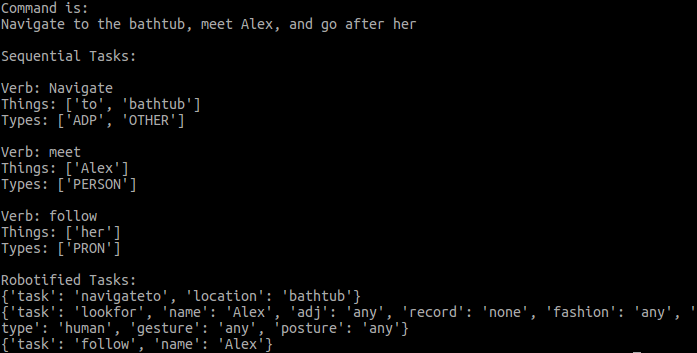

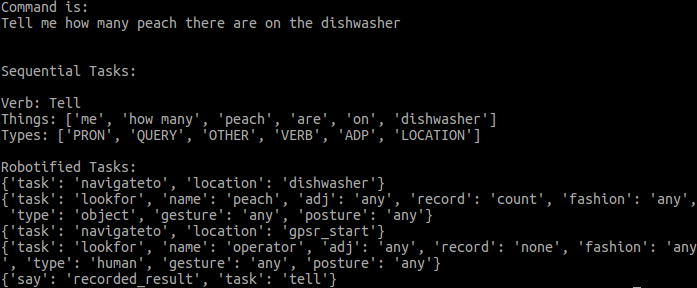

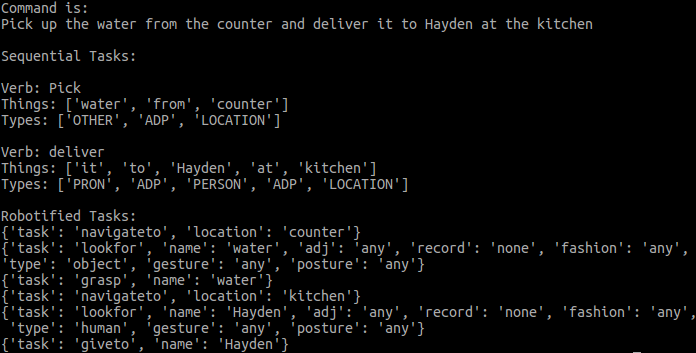

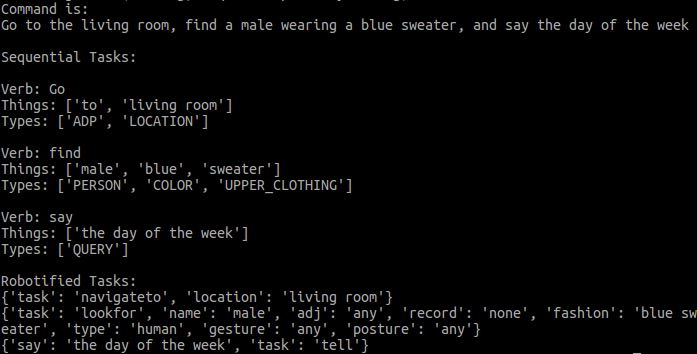

Example of a verbal command being translated into a list of simple tasks for the robot to perform.

Commanding robots to perform tasks verbally

To translate speech into discrete tasks for the robot, I used a combination of neural networks (NNs) specialized for natural language processing (NLP). First, I used Google’s Speech NN API to convert audio into text and to detect hotwords that notify the robot a command is being given. I then used Google’s NLP NN API to assign each word in the sentence a label (e.g., verb, location, pronoun) based on the context provided by the sentence; for example, "milk" is a noun in "the milk" but a verb in "to milk".

I used these labels to construct a sequence of tasks for the robot to perform, treating verbs as cues for the task type and nouns as cues for locations or the corresponding object classes. To classify verbs and nouns that are not explicitly in our dictionary, I used Google’s Word2Vec NN to match each one to a similar word in the dictionary: I computed the cosine similarity between the input word’s vector representation and the vector of each dictionary word, then checked whether the largest similarity exceeded a threshold.

To determine whether a perceived sentence is a special predefined command, I used the Levenshtein distance to compare it against our list of known special commands. During execution, each task extracts relevant information from the HSR’s sensors and the known state of the environment to determine its feasibility and success.
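The idea of using verbs as task cues and nouns as location or object cues can be illustrated with a minimal sketch. It assumes the words have already been tagged (tags in the style of the Universal POS set, e.g. `VERB`, `NOUN`) rather than calling the tagging API; the `VERB_TO_TASK` table, the `KNOWN_LOCATIONS` set, and the `Task` structure are hypothetical stand-ins, not the original implementation.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple

# Hypothetical lookup tables; a real system would use a much larger dictionary.
VERB_TO_TASK: Dict[str, str] = {
    "go": "NAVIGATE",
    "bring": "DELIVER",
    "grab": "PICK_UP",
    "find": "SEARCH",
}
KNOWN_LOCATIONS: Set[str] = {"kitchen", "bedroom", "table"}


@dataclass
class Task:
    """One simple robot task plus the nouns attached to it."""
    task_type: str
    objects: List[str] = field(default_factory=list)
    locations: List[str] = field(default_factory=list)


def build_task_sequence(tagged: List[Tuple[str, str]]) -> List[Task]:
    """Turn (word, part-of-speech tag) pairs into an ordered list of tasks.

    Each known verb starts a new task; each following noun is attached to the
    most recent task as either a location or an object class.
    """
    tasks: List[Task] = []
    for word, tag in tagged:
        key = word.lower()
        if tag == "VERB" and key in VERB_TO_TASK:
            tasks.append(Task(VERB_TO_TASK[key]))
        elif tag == "NOUN" and tasks:
            if key in KNOWN_LOCATIONS:
                tasks[-1].locations.append(key)
            else:
                tasks[-1].objects.append(key)
        # Other tags (DET, ADP, ...) and unknown verbs are skipped here.
    return tasks


# "Go to the kitchen and grab the milk", tagged by hand for illustration.
EXAMPLE = [
    ("Go", "VERB"), ("to", "ADP"), ("the", "DET"), ("kitchen", "NOUN"),
    ("and", "CONJ"), ("grab", "VERB"), ("the", "DET"), ("milk", "NOUN"),
]

if __name__ == "__main__":
    for task in build_task_sequence(EXAMPLE):
        print(task)
    # Task(task_type='NAVIGATE', objects=[], locations=['kitchen'])
    # Task(task_type='PICK_UP', objects=['milk'], locations=[])
```

In this sketch unknown verbs are simply dropped, and nouns that appear before any verb are ignored; a dictionary-matching fallback such as the Word2Vec step would be the natural place to recover unknown verbs.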
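The dictionary-matching step (pick the dictionary word whose vector has the largest cosine similarity to the input word's vector, and accept it only above a threshold) can be sketched as follows. This assumes the vectors have already been looked up from a pretrained Word2Vec model; the three-dimensional vectors, the dictionary entries, and the 0.6 threshold are hypothetical placeholders (real Word2Vec vectors typically have a few hundred dimensions).

```python
import math
from typing import Dict, Optional, Sequence

# Hypothetical 3-d vectors standing in for real Word2Vec embeddings.
VERB_DICTIONARY: Dict[str, Sequence[float]] = {
    "bring": [0.9, 0.1, 0.0],
    "go": [0.0, 1.0, 0.1],
    "find": [0.1, 0.0, 1.0],
}


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between u and v (0.0 if either is a zero vector)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def match_to_dictionary(
    word_vec: Sequence[float],
    dictionary: Dict[str, Sequence[float]],
    threshold: float = 0.6,  # hypothetical cutoff
) -> Optional[str]:
    """Return the most similar dictionary word, or None if it is not similar enough."""
    best_word: Optional[str] = None
    best_score = -math.inf
    for word, vec in dictionary.items():
        score = cosine_similarity(word_vec, vec)
        if score > best_score:
            best_word, best_score = word, score
    return best_word if best_score >= threshold else None


if __name__ == "__main__":
    fetch = [0.8, 0.2, 0.1]     # pretend vector for the unknown verb "fetch"
    banana = [-0.5, -0.5, 0.0]  # pretend vector for an unrelated word
    print(match_to_dictionary(fetch, VERB_DICTIONARY))   # bring
    print(match_to_dictionary(banana, VERB_DICTIONARY))  # None
```

The threshold is what keeps an out-of-vocabulary word from being forced onto its nearest dictionary entry when nothing in the dictionary is actually related.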
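The special-command check can be sketched with the standard dynamic-programming Levenshtein (edit) distance. The command list and the 0.25 cutoff are hypothetical, and dividing the distance by the longer string's length is an added assumption, used here so that short commands such as "stop" do not match unrelated short utterances.

```python
from typing import List, Optional

# Hypothetical list of special predefined commands.
SPECIAL_COMMANDS: List[str] = ["stop", "follow me", "start the task", "what can you do"]


def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, and substitutions."""
    prev = list(range(len(b) + 1))  # distances from "" to each prefix of b
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete ca
                            curr[j - 1] + 1,      # insert cb
                            prev[j - 1] + cost))  # substitute (or match)
        prev = curr
    return prev[-1]


def match_special_command(
    sentence: str,
    commands: List[str],
    max_ratio: float = 0.25,  # hypothetical cutoff on the normalized distance
) -> Optional[str]:
    """Return the closest special command, or None if none is close enough."""
    text = " ".join(sentence.lower().split())  # lowercase, collapse whitespace
    best: Optional[str] = None
    best_ratio = float("inf")
    for cmd in commands:
        ratio = levenshtein(text, cmd) / max(len(text), len(cmd), 1)
        if ratio < best_ratio:
            best, best_ratio = cmd, ratio
    return best if best_ratio <= max_ratio else None


if __name__ == "__main__":
    print(levenshtein("kitten", "sitting"))                       # 3
    print(match_special_command("Follow me.", SPECIAL_COMMANDS))  # follow me
    print(match_special_command("bring me the milk from the kitchen", SPECIAL_COMMANDS))  # None
```

Matching on edit distance rather than exact string equality tolerates small speech-to-text errors (stray punctuation, a misheard character) in otherwise correct special commands.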