About Me CV Research GitHub Google Scholar

Rapidly Creating an Effective, Labelled, and Diverse Training Set for Object Detection using Deep Learning

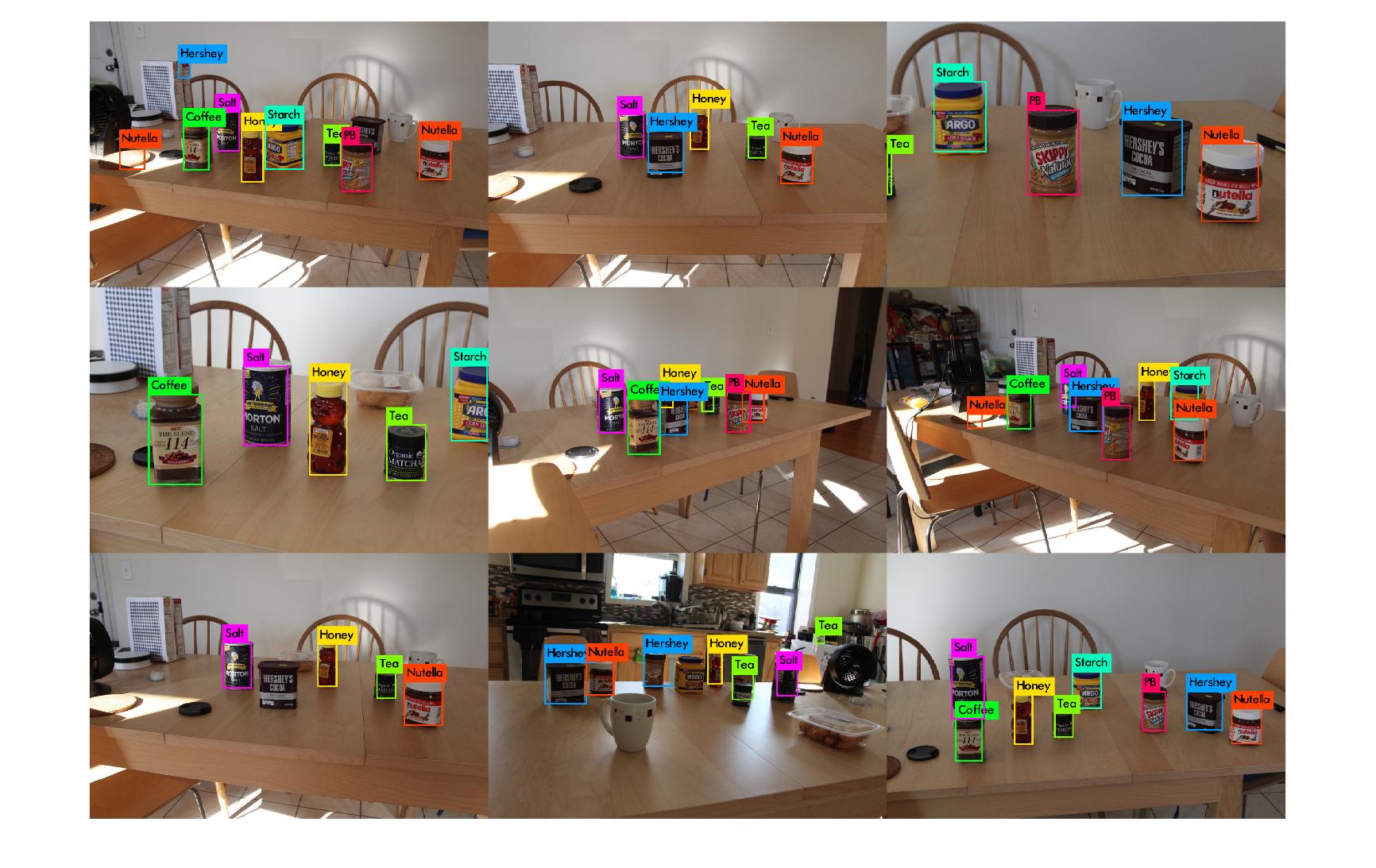

Test results after transfer learning using a model pre-trained on ImageNet with the softmax layer and final convoutional layers left unfrozen

Quickly creating an effective training set for detecting new objects

I did this work as a part of Northeastern's Robocup team to teach our robot to detect new objects overnight. Teams are only allowed to train robots within 1-2 days of the competition.

A challenging problem with detecting custom objects in an image using deep learning, especially within a short time frame, is the lack of labeled training data. In order to rapidly train our neural network to detect new objects, I recorded videos of the objects rotating on a circular electric turntable using a camera mounted on a tripod. Therefore, I could use background subtraction to extract the object from every frame in the video, and obtain both tight bounding boxes and a large and diverse set of views of the object. These extracted images of the objects were then placed together on a background image of scenery. The scale and placement of the objects were randomized (but occlusions were prohibited), and the resulting composite image's brightness, blur, and noise were also randomly distorted. The locations and labels of each object were saved with every composite, coming together to form an expansive training set. By including multiple objects in each training image, more mileage can be gained from each photo by allowing the backpropagation to update more weights with every batch.

I chose the YOLOv2 object detection network because of its speed and state-of-the-art accuracy. The model was pre-trained on ImageNet, with most of the weights kept frozen during training. The final classification layer as well as the final convolutional layer were unfrozen.

The first frame (with no object in it) is used to perform background subtraction on the rest of the frames to extract the object.