About Me CV Research GitHub Google Scholar

Rapidly Creating an Effective, Labelled, and Diverse Training Set for Object Segmentation using Deep Learning

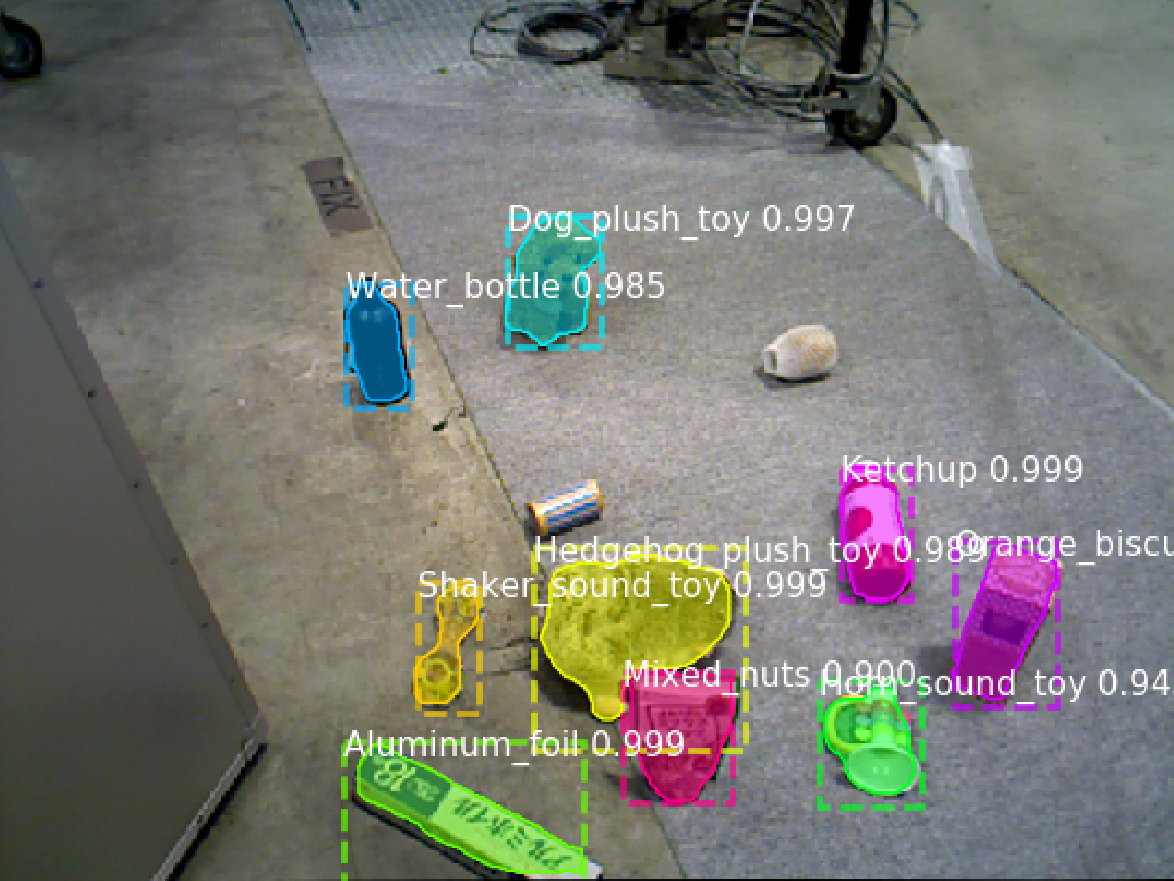

After training, our model was robust in detecting objects regardless of size, proximity to the camera, orientation, occlusions, lighting, and noise.

Rapid Artificial Training Data Generation

To detect and manipulate objects with our robot at RIVeR, we used the Mask R-CNN framework for object detection and segmentation. In order to gather a sufficiently large amount of training data in an efficient and rapid manner, our approach involved generating an exhaustive artificial training set afflicted with various types of noise, inspired by OpenAI's paper on domain randomization (https://arxiv.org/abs/1703.06907). By adding noise that may appear in the images taken by the camera, we train our object detection model to be more robust against them during inference.

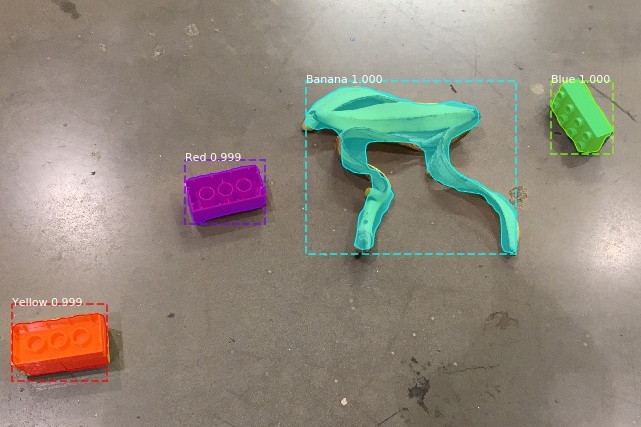

To generate the artificial training images, videos of each object rotating atop an automatic turntable are recorded. This is done at various camera elevations to capture all angles of the object from different perspectives. Background subtraction is then performed on each frame of the video in order to extract all contours of the object. These extracted contours are then randomly chosen, scaled, and rotated, before being placed on an image of scenery similar to the types of environments the robot would operate in. For each image, randomly chosen contours from a few randomly chosen classes are used to teach our model how to best distinguish the objects from each other when they appear together, which is especially helpful for objects that are similar in appearance. Occlusions, up to a certain percentage threshold, are allowed and are properly recorded when annotations are created. Each of these composite images are then afflicted with random adjustments in lighting and artificial image noise. Once a composite is generated, a corresponding annotation that records the object instance’s class and the pixel-wise contour is created in COCO format. As a result, after training, our model was robust in detecting objects regardless of size, proximity to the camera, orientation, occlusions, lighting, and noise.

Using the segmentation masks, we were able to precisely segment each objects point clouds in 3D space, and successfully compute grasps in cluttered environments. This approach was implemented in the World Robot Summit in Tokyo.

Results

Examples of artificial training data, exhibiting changes in noise

Lego blocks and a banana

Groceries to sort

Robustness to changes in orientation and occlusions. Inferences were generated on each frame after the video was recorded, they were not done in real-time.

Demonstration of real-time detections using the Toyota HSR.